In the previous posts, we covered RAID concepts and how to build RAID arrays.

This post covers what to do when a RAID array malfunctions or fails because of a faulty disk or an unexpected accident.

If you want to test or reproduce these recovery procedures yourself, set up the environment by following the previous posts first, then follow along with this one.

[CentOS 8] Disk Management | RAID Concepts

mpjamong.tistory.com

[CentOS 8] Disk Management | Building RAID

mpjamong.tistory.com

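1. Disk Management | Preparing the RAID Failure Test

- Simulate a failure by removing the disks listed below from the RAID volumes built earlier.

- Testing failure and recovery on all five arrays at once could get confusing, so each RAID level is tested separately.

- Linear RAID, RAID 0: not fault tolerant. If a member disk fails, the array cannot start, its /etc/fstab entry cannot be mounted, and the system boots into emergency mode.

- RAID 1, RAID 5, RAID 10: fault tolerant. The array keeps running in a degraded state; after the failed disk is replaced, the new disk is added to the array and it returns to normal use.

- Disks used by each array, and the disk removed for the test

- Linear RAID : /dev/sdb, /dev/sdc --> /dev/sdc (removed)

- RAID 0 : /dev/sdd, /dev/sde --> /dev/sde (removed)

- RAID 1 : /dev/sdf, /dev/sdg --> /dev/sdg (removed)

- RAID 5 : /dev/sdh, /dev/sdi, /dev/sdj --> /dev/sdi (removed)

- RAID 10 : /dev/sdk, /dev/sdl, /dev/sdm, /dev/sdn --> /dev/sdl, /dev/sdn (removed)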
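
Because RAID 1, RAID 5, and RAID 10 keep running after a disk is lost, a failure is easy to miss. Before pulling disks, and again after each recovery, it helps to check which arrays are degraded. For these levels `/proc/mdstat` prints a `[configured/active]` counter such as `[2/1] [U_]` on the line after each array header, while Linear RAID and RAID 0 print no such counter. The minimal sketch below assumes that standard `/proc/mdstat` layout; the helper name `degraded_arrays` is hypothetical.

```shell
# degraded_arrays (hypothetical helper): print the name of every md array whose
# [configured/active] counter in /proc/mdstat shows fewer active members than
# configured. Linear RAID and RAID 0 have no such counter and are never listed.
degraded_arrays() {
  awk '
    # An array header looks like: "md1 : active raid1 sdf1[0]"
    /^md[0-9]+ :/ { name = $1; next }
    # The status line carries a counter such as "[2/1]" (2 configured, 1 active)
    name != "" && match($0, /\[[0-9]+\/[0-9]+\]/) {
      split(substr($0, RSTART + 1, RLENGTH - 2), count, "/")
      if (count[1] + 0 != count[2] + 0) print name
      name = ""
    }
  ' "${1:-/proc/mdstat}"
}
```

Run `degraded_arrays` with no argument to read the live `/proc/mdstat`, or pass a saved copy of the file; an empty result means no redundant array is missing a member.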

2. Disk Management | Linear RAID Failure and Recovery Test

- To set up the failure test, remove the /dev/sdc disk.

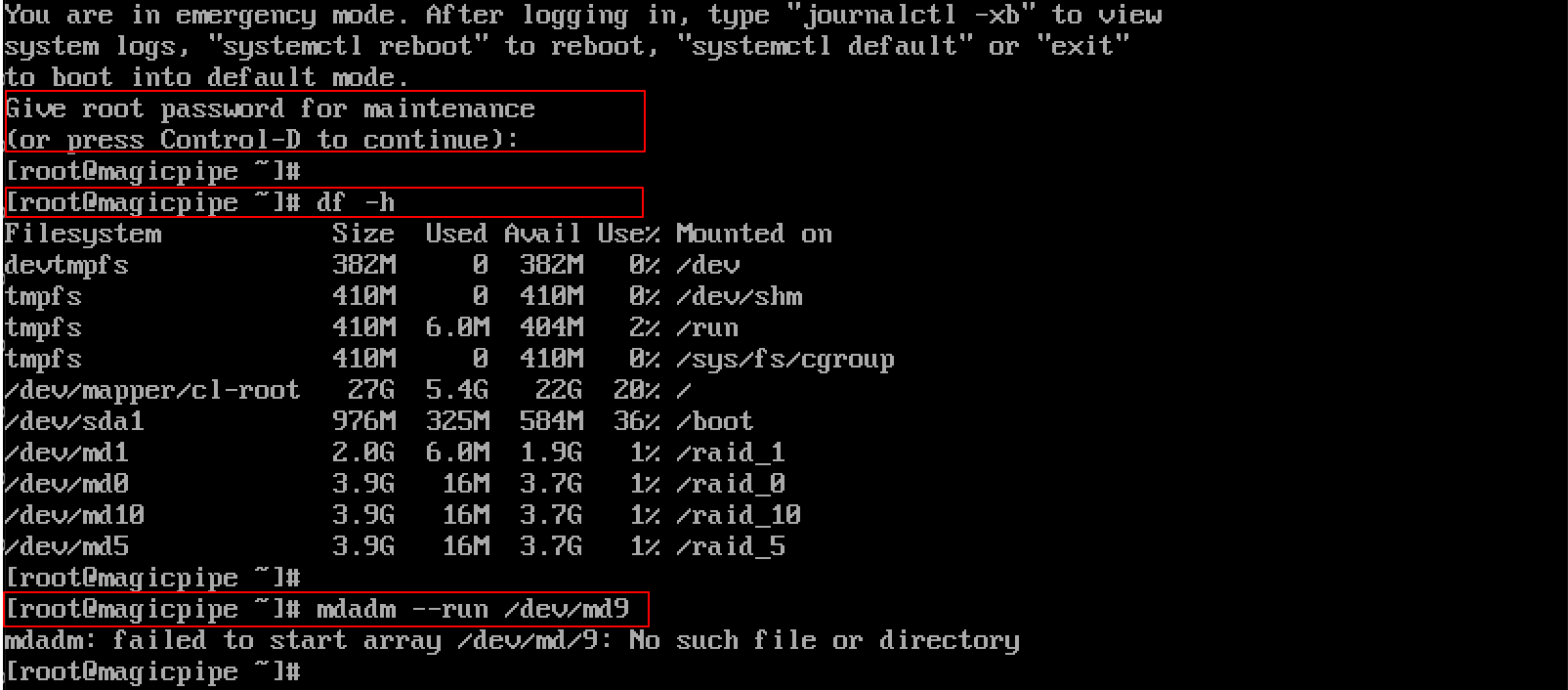

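- Confirming the failure

- On restart, the system does not boot normally and drops into emergency mode.

- Enter the root password to log in.

- The Linear RAID mount point is not available, and the array does not come up even after another restart.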

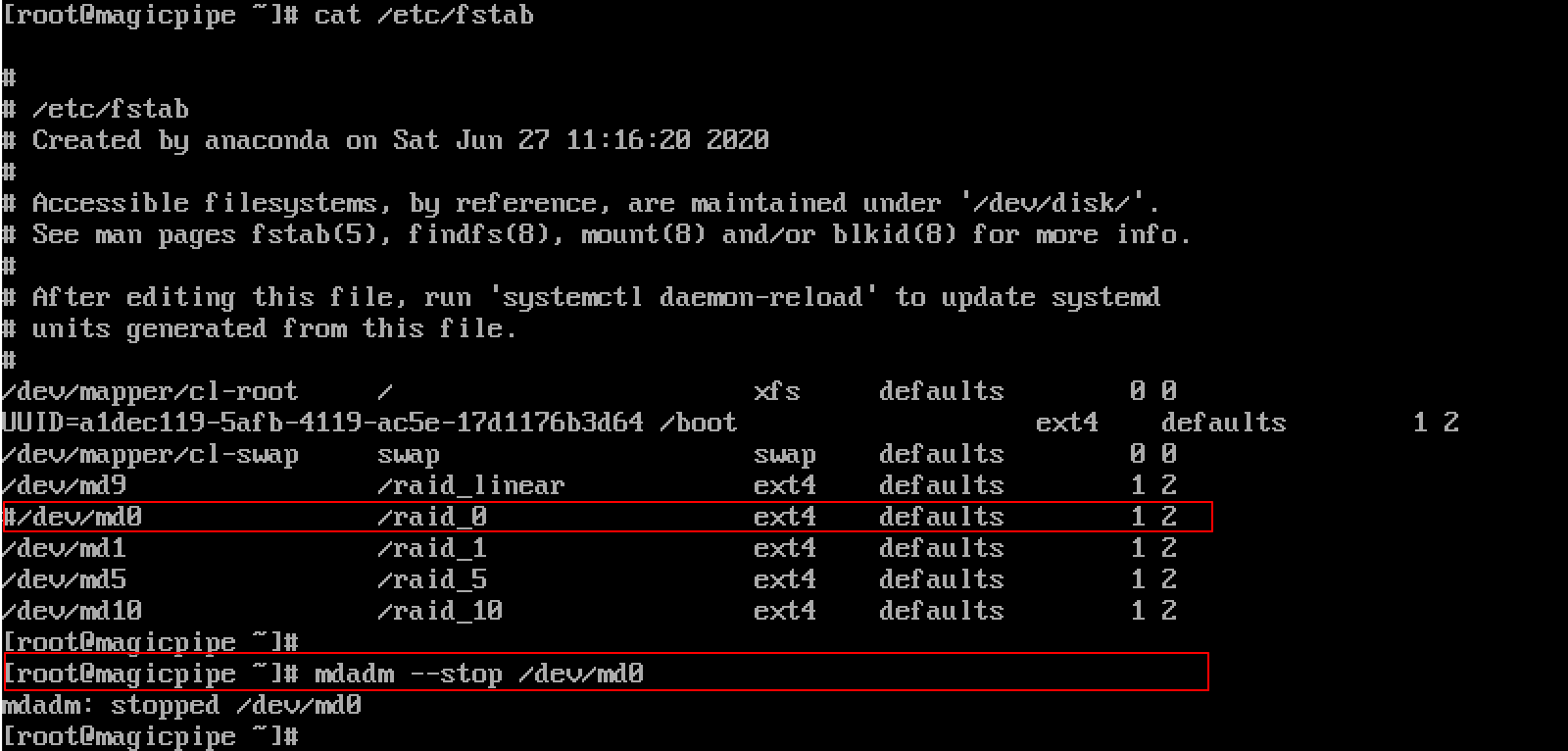

- Remediation

- Stop the Linear RAID array, comment out its line in /etc/fstab (prefix it with '#'), then shut the system down.

- After replacing the failed disk with an identical HDD, the system boots normally.
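
The remediation step above edits /etc/fstab by hand in emergency mode. If you prefer to script it, here is a minimal sketch. The helper name `comment_fstab_entry` is hypothetical, it assumes GNU `sed -i` (as shipped with CentOS 8), and it only touches the line whose first field is exactly the given device, so `/dev/md1` does not also match `/dev/md10`.

```shell
# comment_fstab_entry (hypothetical helper): disable the fstab line for one
# device by prefixing it with '#'. A backup is written to <fstab>.bak first.
comment_fstab_entry() {
  local dev=$1 fstab=${2:-/etc/fstab}
  cp -p "$fstab" "$fstab.bak"
  # Match the device only when followed by whitespace, so /dev/md1 != /dev/md10.
  # '&' re-inserts the matched text after the new '#'. Requires GNU sed -i.
  sed -i "s|^${dev}[[:space:]]|#&|" "$fstab"
}
```

For example: `mdadm --stop /dev/md9 && comment_fstab_entry /dev/md9 && systemctl daemon-reload`, then shut down. As an alternative worth considering, the `nofail` mount option in an fstab entry keeps a missing device from sending the boot into emergency mode, with the trade-off that the mount point is silently left empty.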

- Linear RAID recovery

# Check the disks: the replacement /dev/sdc is detected but has no partition table yet

[root@magicpipe ~]# fdisk -l

...

Disk /dev/sdb: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x9fc3be8c

Device Boot Start End Sectors Size Id Type

/dev/sdb1 2048 4194303 4192256 2G fd Linux raid autodetect

Disk /dev/sdc: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

...

# Partition the new disk (one primary partition of type fd, Linux raid autodetect)

[root@magicpipe ~]# fdisk /dev/sdc

Welcome to fdisk (util-linux 2.32.1).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table.

Created a new DOS disklabel with disk identifier 0xc10cf74b.

Command (m for help): n

Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-4194303, default 2048): ENTER

Last sector, +sectors or +size{K,M,G,T,P} (2048-4194303, default 4194303): ENTER

Created a new partition 1 of type 'Linux' and of size 2 GiB.

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'.

Command (m for help): p

Disk /dev/sdc: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xc10cf74b

Device Boot Start End Sectors Size Id Type

/dev/sdc1 2048 4194303 4192256 2G fd Linux raid autodetect

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.
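
When the replacement disk is the same size as a healthy member, the interactive fdisk session above can be replaced by copying that member's partition table: `sfdisk -d` dumps it in sfdisk's script format, and piping the dump into `sfdisk` writes it to the new disk. In this sketch the helper name and the `DRY_RUN` switch are hypothetical. The dump's `label-id:` line is deleted so the new disk gets its own MBR disk identifier rather than a copy. sfdisk overwrites the target's partition table without asking, so double-check the device name.

```shell
# clone_partition_table (hypothetical helper): copy the partition layout of a
# healthy member disk (src) onto a blank replacement disk (dst).
# With DRY_RUN=1 the pipeline is printed instead of executed.
clone_partition_table() {
  local src=$1 dst=$2
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "sfdisk -d $src | sed '/^label-id:/d' | sfdisk $dst"
    return 0
  fi
  # -d dumps the table as an sfdisk script; without a label-id line,
  # sfdisk generates a fresh disk identifier for the new label.
  sfdisk -d "$src" | sed '/^label-id:/d' | sfdisk "$dst"
}
```

For this test that would be `clone_partition_table /dev/sdb /dev/sdc`; running it once with `DRY_RUN=1` prints the exact pipeline for review.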

# Stop the existing Linear RAID array and create it again with the same members

[root@magicpipe ~]# mdadm --stop /dev/md9

mdadm: stopped /dev/md9

[root@magicpipe ~]# mdadm --create /dev/md9 --level=linear --raid-devices=2 /dev/sdb1 /dev/sdc1

mdadm: /dev/sdb1 appears to be part of a raid array:

level=linear devices=2 ctime=Tue Jul 28 12:09:53 2020

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md9 started.

# Verify the Linear RAID array

[root@magicpipe ~]# mdadm --detail /dev/md9

/dev/md9:

Version : 1.2

Creation Time : Fri Sep 4 18:01:04 2020

Raid Level : linear

Array Size : 4188160 (3.99 GiB 4.29 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Fri Sep 4 18:01:04 2020

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Rounding : 0K

Consistency Policy : none

Name : magicpipe:9 (local to host magicpipe)

UUID : 0c15c8da:603bab34:fd55a707:c971cc4d

Events : 0

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1
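
Note that `mdadm --create` writes a new superblock, so the recreated array has a new UUID (the `0c15c8da:603bab34:fd55a707:c971cc4d` above); the same applies to the RAID 0 array recreated in the next section. If `/etc/mdadm.conf` contains an `ARRAY` line for the array (for example one generated earlier with `mdadm --detail --scan`), that line still carries the old UUID and should be replaced. A minimal sketch follows; the helper name is hypothetical, and the optional third argument exists only so the replacement line can be supplied without running mdadm.

```shell
# refresh_array_line (hypothetical helper): replace the ARRAY line for one md
# device in mdadm.conf with a line describing the array as it exists now.
refresh_array_line() {
  local md=$1 conf=${2:-/etc/mdadm.conf}
  # Default: ask mdadm for the current one-line description of the array.
  local line=${3:-$(mdadm --detail --brief "$md")}
  cp -p "$conf" "$conf.bak"
  # Delete the stale line; the trailing space keeps /dev/md1 from matching /dev/md10.
  sed -i "\|^ARRAY ${md} |d" "$conf"
  printf '%s\n' "$line" >> "$conf"
}
```

For example, `refresh_array_line /dev/md9` after the recreate above. This assumes the file names the array `/dev/md9`; if your ARRAY lines use `/dev/md/9` instead, adjust the match. The initramfs may also carry its own copy of mdadm.conf, in which case regenerating it (for example with `dracut -f`) may be needed as well.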

# Re-enable the Linear RAID entry in /etc/fstab (remove the leading '#')

[root@magicpipe ~]# vi /etc/fstab

# /etc/fstab

# Created by anaconda on Sat Jun 27 11:16:20 2020

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/cl-root / xfs defaults 0 0

UUID=a1dec119-5afb-4119-ac5e-17d1176b3d64 /boot ext4 defaults 1 2

/dev/mapper/cl-swap swap swap defaults 0 0

/dev/md9 /raid_linear ext4 defaults 1 2

/dev/md0 /raid_0 ext4 defaults 1 2

/dev/md1 /raid_1 ext4 defaults 1 2

/dev/md5 /raid_5 ext4 defaults 1 2

/dev/md10 /raid_10 ext4 defaults 1 2
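
Uncommenting the entry can be scripted the same way. As the fstab header itself says, run `systemctl daemon-reload` after the edit; `mount -a` then mounts any fstab entry that is not yet mounted, so a bad line shows up immediately rather than at the next boot. The helper name below is hypothetical, and GNU `sed -i` is assumed.

```shell
# uncomment_fstab_entry (hypothetical helper): re-enable a line that was
# disabled with a leading '#', matching the device field exactly.
uncomment_fstab_entry() {
  local dev=$1 fstab=${2:-/etc/fstab}
  cp -p "$fstab" "$fstab.bak"
  # \1 keeps the device name and the whitespace after it; only the '#' is dropped.
  sed -i "s|^#\(${dev}[[:space:]]\)|\1|" "$fstab"
}
```

A possible sequence: `uncomment_fstab_entry /dev/md9 && systemctl daemon-reload && mount -a && findmnt /raid_linear`.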

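3. Disk Management | RAID 0 Failure and Recovery Test

- To set up the failure test, remove the /dev/sde disk.

- Confirming the failure

- On restart, the system does not boot normally and drops into emergency mode.

- Enter the root password to log in.

- The RAID 0 mount point is not available, and the array does not come up even after another restart.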
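
Why RAID 0 cannot keep running like RAID 1: md splits the array's address space into fixed-size chunks and deals them out to the members in turn, so with two members every other chunk lived on the removed disk. The arithmetic below is a simplified sketch assuming equal-sized members, with offsets measured from the start of each member's data area (the md superblock and data offset are ignored). 524288 bytes is 512K, the chunk size mdadm uses by default. The function name is hypothetical.

```shell
# raid0_locate (hypothetical helper): map a byte offset in a RAID 0 array to the
# member index and the byte offset inside that member's data area.
# Assumes equal-sized members (a single stripe zone).
raid0_locate() {
  local off=$1 chunk=$2 members=$3
  local idx=$((off / chunk))       # which chunk of the array
  local disk=$((idx % members))    # chunks are dealt out round-robin
  local row=$((idx / members))     # number of full stripes before this chunk
  echo "disk=$disk offset=$((row * chunk + off % chunk))"
}
```

For example, `raid0_locate 524288 524288 2` prints `disk=1 offset=0`: the second 512K of the array sits entirely on member 1, and `raid0_locate 1048676 524288 2` prints `disk=0 offset=524388`.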

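- Remediation

- Stop the RAID 0 array, comment out its line in /etc/fstab (prefix it with '#'), then shut the system down.

- After replacing the failed disk with an identical HDD, the system boots normally.

- RAID 0 recovery

# Check the disks: the replacement /dev/sde is detected but has no partition table yet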

[root@magicpipe ~]# fdisk -l

...

Disk /dev/sdd: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xd4450972

Device Boot Start End Sectors Size Id Type

/dev/sdd1 2048 4194303 4192256 2G fd Linux raid autodetect

Disk /dev/sde: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

...

# Partition the new disk (one primary partition of type fd, Linux raid autodetect)

[root@magicpipe ~]# fdisk /dev/sde

Welcome to fdisk (util-linux 2.32.1).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table.

Created a new DOS disklabel with disk identifier 0x8e8cb647.

Command (m for help): n

Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-4194303, default 2048): ENTER

Last sector, +sectors or +size{K,M,G,T,P} (2048-4194303, default 4194303): ENTER

Created a new partition 1 of type 'Linux' and of size 2 GiB.

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'.

Command (m for help): p

Disk /dev/sde: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x8e8cb647

Device Boot Start End Sectors Size Id Type

/dev/sde1 2048 4194303 4192256 2G fd Linux raid autodetect

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.

# Stop the existing RAID 0 array and create it again with the same members

[root@magicpipe ~]# mdadm --stop /dev/md0

mdadm: stopped /dev/md0

[root@magicpipe ~]# mdadm --create /dev/md0 --level=0 --raid-devices=2 /dev/sdd1 /dev/sde1

mdadm: /dev/sdd1 appears to be part of a raid array:

level=raid0 devices=2 ctime=Tue Jul 28 12:43:32 2020

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

# Verify the RAID 0 array

[root@magicpipe ~]# mdadm --detail /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Sat Sep 5 10:37:03 2020

Raid Level : raid0

Array Size : 4188160 (3.99 GiB 4.29 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sat Sep 5 10:37:03 2020

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Layout : -unknown-

Chunk Size : 512K

Consistency Policy : none

Name : magicpipe:0 (local to host magicpipe)

UUID : e761738d:147207af:6a5cfc3c:257a9332

Events : 0

Number Major Minor RaidDevice State

0 8 49 0 active sync /dev/sdd1

1 8 65 1 active sync /dev/sde1
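
One caution about the recreate step: Linear RAID and RAID 0 keep no redundant copy, so `mdadm --create` only rebuilds the array structure. Whatever was stored on the failed disk is gone, and the ext4 filesystem on top may be inconsistent. A read-only check before re-enabling the fstab entry below makes that visible. In this sketch the helper name and the `FSCK` override (there only so a stand-in command can be substituted for testing) are hypothetical; `e2fsck -f -n` forces a full check and answers "no" to every repair prompt, so nothing is modified.

```shell
# check_recreated_fs (hypothetical helper): run a read-only ext4 check on a
# recreated non-redundant array before it is mounted again.
check_recreated_fs() {
  local md=$1
  # -f: check even if marked clean; -n: open read-only, answer "no" to all fixes.
  if ${FSCK:-e2fsck} -f -n "$md"; then
    echo "$md: filesystem check passed"
  else
    echo "$md: filesystem damaged; repair it (e2fsck -f $md) or recreate it (mkfs.ext4 $md) and restore from backup" >&2
    return 1
  fi
}
```

Run it while the array is still unmounted, for example `check_recreated_fs /dev/md0`.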

# Re-enable the RAID 0 entry in /etc/fstab (remove the leading '#')

[root@magicpipe ~]# vi /etc/fstab

# /etc/fstab

# Created by anaconda on Sat Jun 27 11:16:20 2020

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/cl-root / xfs defaults 0 0

UUID=a1dec119-5afb-4119-ac5e-17d1176b3d64 /boot ext4 defaults 1 2

/dev/mapper/cl-swap swap swap defaults 0 0

/dev/md9 /raid_linear ext4 defaults 1 2

/dev/md0 /raid_0 ext4 defaults 1 2

/dev/md1 /raid_1 ext4 defaults 1 2

/dev/md5 /raid_5 ext4 defaults 1 2

/dev/md10 /raid_10 ext4 defaults 1 2

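4. Disk Management | RAID 1 Failure and Recovery Test

- To set up the failure test, remove the /dev/sdg disk.

- Confirming the failure

- Because RAID 1 is fault tolerant, the system boots normally despite the failed disk, and the array keeps running in a degraded state.

# Check that RAID 1 is still mounted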

[root@magicpipe ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 382M 0 382M 0% /dev

tmpfs 410M 0 410M 0% /dev/shm

tmpfs 410M 6.5M 403M 2% /run

tmpfs 410M 0 410M 0% /sys/fs/cgroup

/dev/mapper/cl-root 27G 5.4G 22G 20% /

/dev/sda1 976M 325M 584M 36% /boot

/dev/md5 3.9G 16M 3.7G 1% /raid_5

/dev/md9 3.9G 16M 3.7G 1% /raid_linear

/dev/md0 3.9G 16M 3.7G 1% /raid_0

/dev/md10 3.9G 16M 3.7G 1% /raid_10

/dev/md1 2.0G 6.0M 1.9G 1% /raid_1

tmpfs 82M 1.2M 81M 2% /run/user/42

tmpfs 82M 4.0K 82M 1% /run/user/0

# Check the RAID 1 status

[root@magicpipe ~]# mdadm --detail /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Tue Jul 28 12:49:36 2020

Raid Level : raid1

Array Size : 2094080 (2045.00 MiB 2144.34 MB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 2

Total Devices : 1

Persistence : Superblock is persistent

Update Time : Sat Sep 5 11:02:46 2020

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : magicpipe:1 (local to host magicpipe)

UUID : 981f868b:ff2e320a:41c7d206:f6a85369

Events : 19

Number Major Minor RaidDevice State

0 8 81 0 active sync /dev/sdf1

- 0 0 1 removed
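
The two things to look for above are the `State : clean, degraded` line and the `removed` row. The minimal sketch below (hypothetical helper name) pulls both out of `mdadm --detail` output so the check can run from a script or cron job. It reads the output from a file or standard input and assumes the usual `key : value` layout shown above.

```shell
# md_detail_summary (hypothetical helper): print the State value and the
# RaidDevice slots marked "removed" from `mdadm --detail` output.
md_detail_summary() {
  awk -F' : ' '
    # Keys are right-aligned with leading spaces, e.g. "             State : clean"
    $1 ~ /^ *State$/ { state = $2; sub(/[ \t]+$/, "", state) }
    # Removed rows look like "-  0  0  1  removed": field 4 is the RaidDevice slot
    { n = split($0, f, " ") }
    n == 5 && f[5] == "removed" { slots = slots (slots == "" ? "" : ",") f[4] }
    END { print "state=" state " removed=" (slots == "" ? "none" : slots) }
  ' "${1:--}"
}
```

For the degraded RAID 1 output above, `mdadm --detail /dev/md1 | md_detail_summary` would print `state=clean, degraded removed=1`.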

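- RAID 1 recovery

- Replace the failed disk with an identical HDD, add it to the existing RAID 1 array as shown below, and confirm that the array is healthy again.

# Check the replacement HDD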

[root@magicpipe ~]# fdisk -l

...

Disk /dev/sdf: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xa1668631

Device Boot Start End Sectors Size Id Type

/dev/sdf1 2048 4194303 4192256 2G fd Linux raid autodetect

Disk /dev/sdg: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

...

# Partition the replacement HDD

[root@magicpipe ~]# fdisk /dev/sdg

Welcome to fdisk (util-linux 2.32.1).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table.

Created a new DOS disklabel with disk identifier 0xa107e7c0.

Command (m for help): n

Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-4194303, default 2048): ENTER

Last sector, +sectors or +size{K,M,G,T,P} (2048-4194303, default 4194303): ENTER

Created a new partition 1 of type 'Linux' and of size 2 GiB.

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'.

Command (m for help): p

Disk /dev/sdg: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xa107e7c0

Device Boot Start End Sectors Size Id Type

/dev/sdg1 2048 4194303 4192256 2G fd Linux raid autodetect

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.

# Add the replacement HDD to RAID 1

[root@magicpipe ~]# mdadm /dev/md1 --add /dev/sdg1

mdadm: added /dev/sdg1
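
`mdadm --add` returns immediately; the data is then copied to the new member in the background, and the `--detail` output below already shows the finished state. While the copy is still running, `State` includes `recovering`, `--detail` adds a `Rebuild Status : NN% complete` line, and `/proc/mdstat` shows a `recovery = NN%` progress line. `mdadm --wait /dev/md1` blocks until the recovery finishes. The sketch below (hypothetical helper name) reads the progress figure from text in `/proc/mdstat` format.

```shell
# rebuild_progress (hypothetical helper): print "<array> <percent>" for every
# array that /proc/mdstat reports as recovering, resyncing or reshaping.
rebuild_progress() {
  awk '
    /^md[0-9]+ :/ { name = $1; next }
    # Progress lines look like: "[==>....]  recovery = 12.6% (264576/2094080) ..."
    match($0, /(recovery|resync|reshape) = *[0-9.]+%/) {
      pct = substr($0, RSTART, RLENGTH)
      sub(/.*= */, "", pct)    # keep only the "12.6%" part
      print name, pct
    }
  ' "${1:-/proc/mdstat}"
}
```

An empty result means nothing is rebuilding; `mdadm --wait /dev/md1 && mdadm --detail /dev/md1` is a simple way to check the final state once the copy is done.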

# Check the RAID 1 status

[root@magicpipe ~]# mdadm --detail /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Tue Jul 28 12:49:36 2020

Raid Level : raid1

Array Size : 2094080 (2045.00 MiB 2144.34 MB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sat Sep 5 11:11:13 2020

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : magicpipe:1 (local to host magicpipe)

UUID : 981f868b:ff2e320a:41c7d206:f6a85369

Events : 44

Number Major Minor RaidDevice State

0 8 81 0 active sync /dev/sdf1

2 8 97 1 active sync /dev/sdg1

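5. Disk Management | RAID 5 Failure and Recovery Test

- To set up the failure test, remove the /dev/sdi disk.

- Confirming the failure

- Because RAID 5 keeps parity, it survives the loss of any one disk, so the system boots normally.

# Check that RAID 5 is still mounted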

[root@magicpipe ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 382M 0 382M 0% /dev

tmpfs 410M 0 410M 0% /dev/shm

tmpfs 410M 6.4M 403M 2% /run

tmpfs 410M 0 410M 0% /sys/fs/cgroup

/dev/mapper/cl-root 27G 5.4G 22G 20% /

/dev/sda1 976M 325M 584M 36% /boot

/dev/md10 3.9G 16M 3.7G 1% /raid_10

/dev/md0 3.9G 16M 3.7G 1% /raid_0

/dev/md9 3.9G 16M 3.7G 1% /raid_linear

/dev/md1 2.0G 6.0M 1.9G 1% /raid_1

/dev/md5 3.9G 16M 3.7G 1% /raid_5

tmpfs 82M 1.2M 81M 2% /run/user/42

tmpfs 82M 4.0K 82M 1% /run/user/0

# Check the RAID 5 status

[root@magicpipe ~]# mdadm --detail /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Tue Jul 28 12:53:40 2020

Raid Level : raid5

Array Size : 4188160 (3.99 GiB 4.29 GB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 3

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sat Sep 5 17:07:31 2020

State : clean, degraded

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : magicpipe:5 (local to host magicpipe)

UUID : 5742527a:54cd71b9:66b76345:a3517f91

Events : 20

Number Major Minor RaidDevice State

0 8 113 0 active sync /dev/sdh1

- 0 0 1 removed

3 8 129 2 active sync /dev/sdi1

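- RAID 5 recovery

- Note: the /dev/sdi1 in slot 2 above (member number 3, minor number 129) is actually the original /dev/sdj1. When the system booted without /dev/sdi, the disks after it were enumerated one letter earlier, which is why the same member 3 appears as /dev/sdj1 (minor 145) after recovery. md identifies members by their superblock, not by device name, so the array still assembles correctly.

- Replace the failed disk with an identical HDD, add it to the existing RAID 5 array as shown below, and confirm that the array is healthy again.

# Check the replacement HDD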

[root@magicpipe ~]# fdisk -l

...

Disk /dev/sdh: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xf4bb6d03

Device Boot Start End Sectors Size Id Type

/dev/sdh1 2048 4194303 4192256 2G fd Linux raid autodetect

Disk /dev/sdi: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdj: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x5e75bfbf

Device Boot Start End Sectors Size Id Type

/dev/sdj1 2048 4194303 4192256 2G fd Linux raid autodetect

...

# Partition the replacement HDD

[root@magicpipe ~]# fdisk /dev/sdi

Welcome to fdisk (util-linux 2.32.1).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table.

Created a new DOS disklabel with disk identifier 0x584f063a.

Command (m for help): n

Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-4194303, default 2048): ENTER

Last sector, +sectors or +size{K,M,G,T,P} (2048-4194303, default 4194303): ENTER

Created a new partition 1 of type 'Linux' and of size 2 GiB.

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'.

Command (m for help): p

Disk /dev/sdi: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x584f063a

Device Boot Start End Sectors Size Id Type

/dev/sdi1 2048 4194303 4192256 2G fd Linux raid autodetect

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.

# Add the replacement HDD to RAID 5

[root@magicpipe ~]# mdadm /dev/md5 --add /dev/sdi1

mdadm: added /dev/sdi1

# Check the RAID 5 status

[root@magicpipe ~]# mdadm --detail /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Tue Jul 28 12:53:40 2020

Raid Level : raid5

Array Size : 4188160 (3.99 GiB 4.29 GB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 3

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sat Sep 5 17:18:37 2020

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : magicpipe:5 (local to host magicpipe)

UUID : 5742527a:54cd71b9:66b76345:a3517f91

Events : 45

Number Major Minor RaidDevice State

0 8 113 0 active sync /dev/sdh1

4 8 129 1 active sync /dev/sdi1

3 8 145 2 active sync /dev/sdj1
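
In this test the disk was pulled while the system was off, so mdadm already listed the slot as `removed`. A disk that is failing but still attached has to be taken out of the array first: mark it faulty with `--fail`, detach it with `--remove`, and only then swap the hardware and `--add` the new partition as above. A sketch follows; the helper names and the `DRY_RUN` switch (which prints the commands instead of running them) are hypothetical.

```shell
# run (hypothetical helper): execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# retire_member (hypothetical helper): take a still-attached member out of an
# array so that its disk can be replaced.
retire_member() {
  local md=$1 dev=$2
  run mdadm "$md" --fail "$dev"     # mark the member faulty; array runs degraded
  run mdadm "$md" --remove "$dev"   # detach the faulty member from the array
}
```

For example, `retire_member /dev/md5 /dev/sdi1`, then replace and partition the disk and run `mdadm /dev/md5 --add /dev/sdi1`. If the disk being added was previously a member of some md array, `mdadm --zero-superblock` on its partition clears the stale metadata first.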

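6. Disk Management | RAID 10 Failure and Recovery Test

- To set up the failure test, remove the /dev/sdl and /dev/sdn disks.

- Confirming the failure

- Because RAID 10 mirrors its data, the system boots normally with both disks removed, provided each mirror pair still has one working member.

# Check that RAID 10 is still mounted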

[root@magicpipe ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 382M 0 382M 0% /dev

tmpfs 410M 0 410M 0% /dev/shm

tmpfs 410M 6.4M 403M 2% /run

tmpfs 410M 0 410M 0% /sys/fs/cgroup

/dev/mapper/cl-root 27G 5.4G 22G 20% /

/dev/sda1 976M 325M 584M 36% /boot

/dev/md1 2.0G 6.0M 1.9G 1% /raid_1

/dev/md0 3.9G 16M 3.7G 1% /raid_0

/dev/md9 3.9G 16M 3.7G 1% /raid_linear

/dev/md5 3.9G 16M 3.7G 1% /raid_5

/dev/md10 3.9G 16M 3.7G 1% /raid_10

tmpfs 82M 1.2M 81M 2% /run/user/42

tmpfs 82M 4.0K 82M 1% /run/user/0

# Check the RAID 10 status

[root@magicpipe ~]# mdadm --detail /dev/md10

/dev/md10:

Version : 1.2

Creation Time : Thu Jul 30 09:54:22 2020

Raid Level : raid10

Array Size : 4188160 (3.99 GiB 4.29 GB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 4

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sat Sep 5 17:54:01 2020

State : clean, degraded

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Layout : near=2

Chunk Size : 512K

Consistency Policy : resync

Name : magicpipe:10 (local to host magicpipe)

UUID : 3e07ec07:d61b9ee8:ffd22cd4:b8013b5e

Events : 19

Number Major Minor RaidDevice State

0 8 161 0 active sync set-A /dev/sdk1

- 0 0 1 removed

2 8 177 2 active sync set-A /dev/sdl1

- 0 0 3 removed
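
With `Layout : near=2`, RAID 10 keeps the two copies of each chunk on adjacent slots, so with four devices slots 0 and 1 mirror each other, as do slots 2 and 3 (the `set-A`/`set-B` labels mark the two copies). This test removed slots 1 and 3, one from each pair, so every chunk still has a copy; losing slots 0 and 1 together would take the array down. A minimal check, assuming the near=2 layout with an even number of devices (the helper name is hypothetical):

```shell
# raid10_near2_survives (hypothetical helper): succeed if a RAID 10 array with
# layout near=2 and an even device count still has a copy of every chunk.
# Usage: raid10_near2_survives <raid-devices> <missing-slot>...
raid10_near2_survives() {
  local n=$1 k=0 missing
  shift
  missing=" $* "    # pad with spaces so " 1 " cannot match inside " 10 "
  while [ "$k" -lt "$n" ]; do
    # slots k and k+1 hold the two copies of the same chunks
    case $missing in
      *" $k "*)
        case $missing in *" $((k + 1)) "*) return 1 ;; esac ;;
    esac
    k=$((k + 2))
  done
  return 0
}
```

For this test, `raid10_near2_survives 4 1 3` succeeds, while `raid10_near2_survives 4 0 1` fails.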

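- RAID 10 recovery

- Note: device names shifted while the disks were missing. The kernel names disks in the order it finds them at boot, so with /dev/sdl gone the original /dev/sdm was named /dev/sdl. The /dev/sdl1 in slot 2 above (member number 2, minor number 177) is therefore the original /dev/sdm1, which appears under its own name (minor 193) after recovery. md identifies members by their superblock, not by device name.

- Replace the failed disks with identical HDDs, add them to the existing RAID 10 array as shown below, and confirm that the array is healthy again.

# Check the replacement HDDs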

[root@magicpipe ~]# fdisk -l

...

Disk /dev/sdk: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x3c58045c

Device Boot Start End Sectors Size Id Type

/dev/sdk1 2048 4194303 4192256 2G fd Linux raid autodetect

Disk /dev/sdl: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdm: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x2bb9dd68

Device Boot Start End Sectors Size Id Type

/dev/sdm1 2048 4194303 4192256 2G fd Linux raid autodetect

Disk /dev/sdn: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

...

# Partition the replacement HDD (/dev/sdl)

[root@magicpipe ~]# fdisk /dev/sdl

Welcome to fdisk (util-linux 2.32.1).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table.

Created a new DOS disklabel with disk identifier 0xe49a2a2f.

Command (m for help): n

Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-4194303, default 2048): ENTER

Last sector, +sectors or +size{K,M,G,T,P} (2048-4194303, default 4194303): ENTER

Created a new partition 1 of type 'Linux' and of size 2 GiB.

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'.

Command (m for help): p

Disk /dev/sdl: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xe49a2a2f

Device Boot Start End Sectors Size Id Type

/dev/sdl1 2048 4194303 4192256 2G fd Linux raid autodetect

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.

# Partition the replacement HDD (/dev/sdn)

[root@magicpipe ~]# fdisk /dev/sdn

Welcome to fdisk (util-linux 2.32.1).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table.

Created a new DOS disklabel with disk identifier 0x690bfae2.

Command (m for help): n

Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-4194303, default 2048): ENTER

Last sector, +sectors or +size{K,M,G,T,P} (2048-4194303, default 4194303): ENTER

Created a new partition 1 of type 'Linux' and of size 2 GiB.

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'.

Command (m for help): p

Disk /dev/sdn: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x690bfae2

Device Boot Start End Sectors Size Id Type

/dev/sdn1 2048 4194303 4192256 2G fd Linux raid autodetect

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.

# Add the replacement HDDs to RAID 10

[root@magicpipe ~]# mdadm /dev/md10 --add /dev/sdl1 /dev/sdn1

mdadm: added /dev/sdl1

mdadm: added /dev/sdn1

# Check the RAID 10 status

[root@magicpipe ~]# mdadm --detail /dev/md10

/dev/md10:

Version : 1.2

Creation Time : Thu Jul 30 09:54:22 2020

Raid Level : raid10

Array Size : 4188160 (3.99 GiB 4.29 GB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sat Sep 5 18:11:33 2020

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : near=2

Chunk Size : 512K

Consistency Policy : resync

Name : magicpipe:10 (local to host magicpipe)

UUID : 3e07ec07:d61b9ee8:ffd22cd4:b8013b5e

Events : 51

Number Major Minor RaidDevice State

0 8 161 0 active sync set-A /dev/sdk1

5 8 209 1 active sync set-B /dev/sdn1

2 8 193 2 active sync set-A /dev/sdm1

4 8 177 3 active sync set-B /dev/sdl1

That wraps up RAID concepts, building RAID arrays, and handling RAID failures. Thank you for reading this long post.
